-

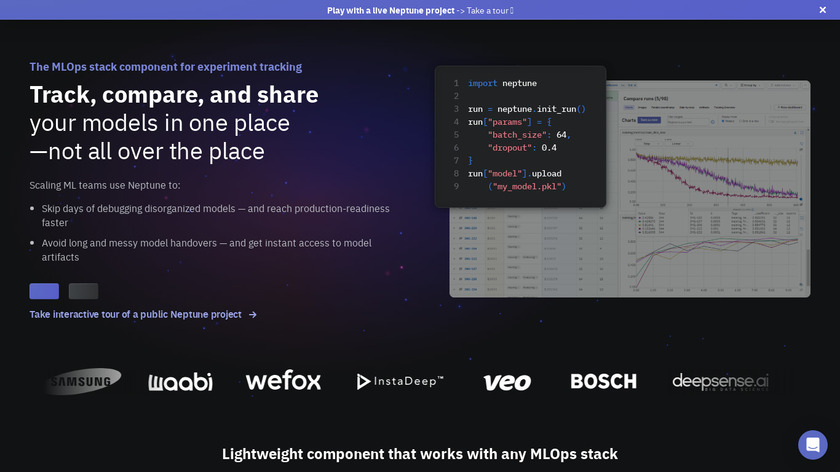

Neptune brings organization and collaboration to data science projects. All experiment-related objects are backed up and organized, ready to be analyzed and shared with others. It works with all common technologies and integrates with other tools.

Pricing:

- Open Source

- Freemium

- Free Trial

Experiment tracking tools like MLflow, Weights & Biases, and Neptune.ai provide a pipeline that automatically tracks the metadata and artifacts generated by each experiment you run. Although their features differ, they all give iterative model development a systematic structure: every run is recorded with its parameters, metrics, and outputs so that runs can be compared and reproduced later.
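To make the idea concrete, here is a minimal sketch of what a tracker records per run, using only the Python standard library. It is not the API of MLflow, Weights & Biases, or Neptune.ai; the names `log_run` and `best_run` and the `run.json` layout are hypothetical and chosen only for illustration.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


def log_run(root: Path, params: dict, metrics: dict, artifacts: list[Path]) -> Path:
    """Write one experiment run to its own directory and return that directory."""
    run_id = uuid.uuid4().hex[:8]  # short random identifier for the run
    run_dir = root / run_id
    run_dir.mkdir(parents=True)
    record = {
        "run_id": run_id,
        "started_at": time.time(),
        "params": params,      # hyperparameters used for this run
        "metrics": metrics,    # evaluation results produced by this run
        "artifacts": [str(p) for p in artifacts],  # paths to model files, plots, logs
    }
    (run_dir / "run.json").write_text(json.dumps(record, indent=2))
    return run_dir


def best_run(root: Path, metric: str) -> dict:
    """Return the recorded run with the highest value for `metric`."""
    runs = [json.loads(p.read_text()) for p in root.glob("*/run.json")]
    return max(runs, key=lambda r: r["metrics"][metric])


if __name__ == "__main__":
    root = Path(tempfile.mkdtemp())
    log_run(root, {"lr": 0.1}, {"accuracy": 0.81}, [])
    log_run(root, {"lr": 0.01}, {"accuracy": 0.88}, [])
    print(best_run(root, "accuracy")["params"])
```

A real tracking tool adds a server, a UI, and artifact storage on top of this, but the core record (parameters, metrics, artifact references per run) is the same shape.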

#Data Science And Machine Learning #Data Science Notebooks #Machine Learning Tools 23 social mentions

-

MinIO is an open-source, minimal cloud storage server.

The metadata and model artifacts from experiment tracking can amount to a lot of data: trained model files, data files, metrics and logs, visualizations, configuration files, checkpoints, and so on. If the experiment tracking tool doesn't support data storage, an alternative is to track the training and validation data versions used by each experiment. Teams can persist the data in remote storage systems such as S3 buckets, MinIO, or Google Cloud Storage, or version it with tools like Data Version Control (DVC) or Git LFS (Large File Storage). These options facilitate collaboration but have implications for artifact-model traceability, storage costs, and data privacy.
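One way to track data versions per experiment is to record a content hash of the dataset alongside each run. The sketch below assumes the data lives in a local directory; the function names and the 12-character version string are hypothetical, and this is only a conceptual illustration of content-addressed versioning, not how DVC or Git LFS are implemented.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dataset_manifest(data_dir: Path) -> dict:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    files = sorted(p for p in data_dir.rglob("*") if p.is_file())
    return {p.relative_to(data_dir).as_posix(): file_sha256(p) for p in files}


def dataset_version(manifest: dict) -> str:
    """Derive one short identifier for the whole dataset from its manifest."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]
```

Storing the resulting version string with each experiment's metadata ties a model back to the exact data it was trained on, even when the files themselves sit in a remote bucket.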

#Cloud Storage #Cloud Computing #Object Storage 156 social mentions

-

Git Large File Storage (LFS) replaces large files such as audio samples, videos, datasets, and graphics with text pointers.

Pricing:

- Open Source

#Git #Development #Code Collaboration 101 social mentions

-

Docker Hub is a cloud-based registry service.

Pricing:

- Open Source

Configure a container registry such as Docker Hub or GitHub Container Registry.
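Whichever registry is chosen, images are addressed as `<registry>/<namespace>/<name>:<tag>`, with `docker.io` as the host for Docker Hub and `ghcr.io` for GitHub Container Registry. The helper below is a small sketch of building such references; the function name and the example namespace `acme` and image `churn-model` are hypothetical.

```python
DOCKER_HUB = "docker.io"   # registry host for Docker Hub
GHCR = "ghcr.io"           # registry host for GitHub Container Registry


def image_reference(registry: str, namespace: str, name: str, tag: str = "latest") -> str:
    """Build a fully qualified image reference for pushing or pulling."""
    for part in (namespace, name):
        # Repository paths in image references must be lowercase.
        if not part or part != part.lower():
            raise ValueError(f"invalid repository component: {part!r}")
    return f"{registry}/{namespace}/{name}:{tag}"


if __name__ == "__main__":
    print(image_reference(DOCKER_HUB, "acme", "churn-model", "1.0.0"))
    print(image_reference(GHCR, "acme", "churn-model", "1.0.0"))
```

Pinning an explicit tag per model version, rather than relying on `latest`, keeps a deployed image traceable to the experiment that produced it.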

#Developer Tools #Code Collaboration #Git 314 social mentions

-

Amazon S3 is an object storage service where users can store their business data on a secure, cloud-based platform. Amazon S3 operates in 54 availability zones within 18 geographic regions and 1 local region.

#Cloud Hosting #Object Storage #Cloud Storage 175 social mentions

Discuss: A step-by-step guide to building an MLOps pipeline
